A Guide to Data Consolidation: Prioritizing Tokens for Accurate and Efficient Updates

Data consolidation is a crucial aspect of managing and organizing data within a system. It involves the merging and prioritization of data from multiple sources or fields to create a unified and up-to-date dataset. In this article, we will explore the process of data flow and how to prioritize tokens to ensure accurate and efficient data updates.

If you don’t know how our interface that is showing your integrations works, then we recommend you first take a look at our article on Data Flow view.

Video

Token Prioritization:

Before diving into the data consolidation process, it is essential to have an API token created. If you are unsure about how to create your own token, please refer to the documentation on token creation.

It is important to note that token prioritization only affects the overwriting of data and has no impact on data creation. Therefore, it specifically applies to “update” scenarios. Tokens do not affect the manual overwriting of data in the system, and data can always be overwritten manually regardless of token prioritization.

Data Consolidation Process:

To consolidate your data, follow the steps outlined below:

- Open the module or addon where you want to consolidate your data.

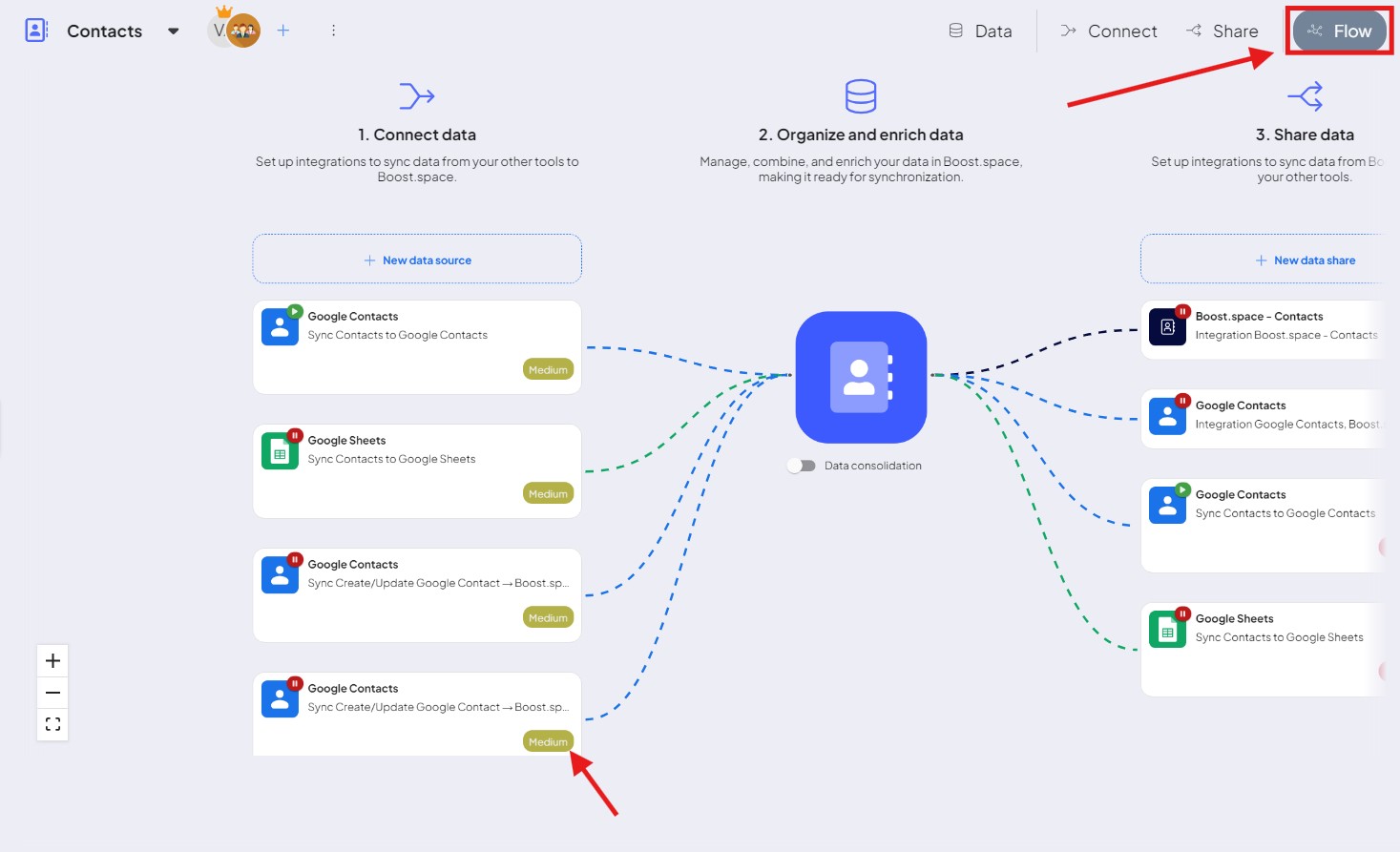

- Locate the “Flow” option in the top bar and click on it.

- In the first column, you can select the priority of the token.

Token Prioritization Interface:

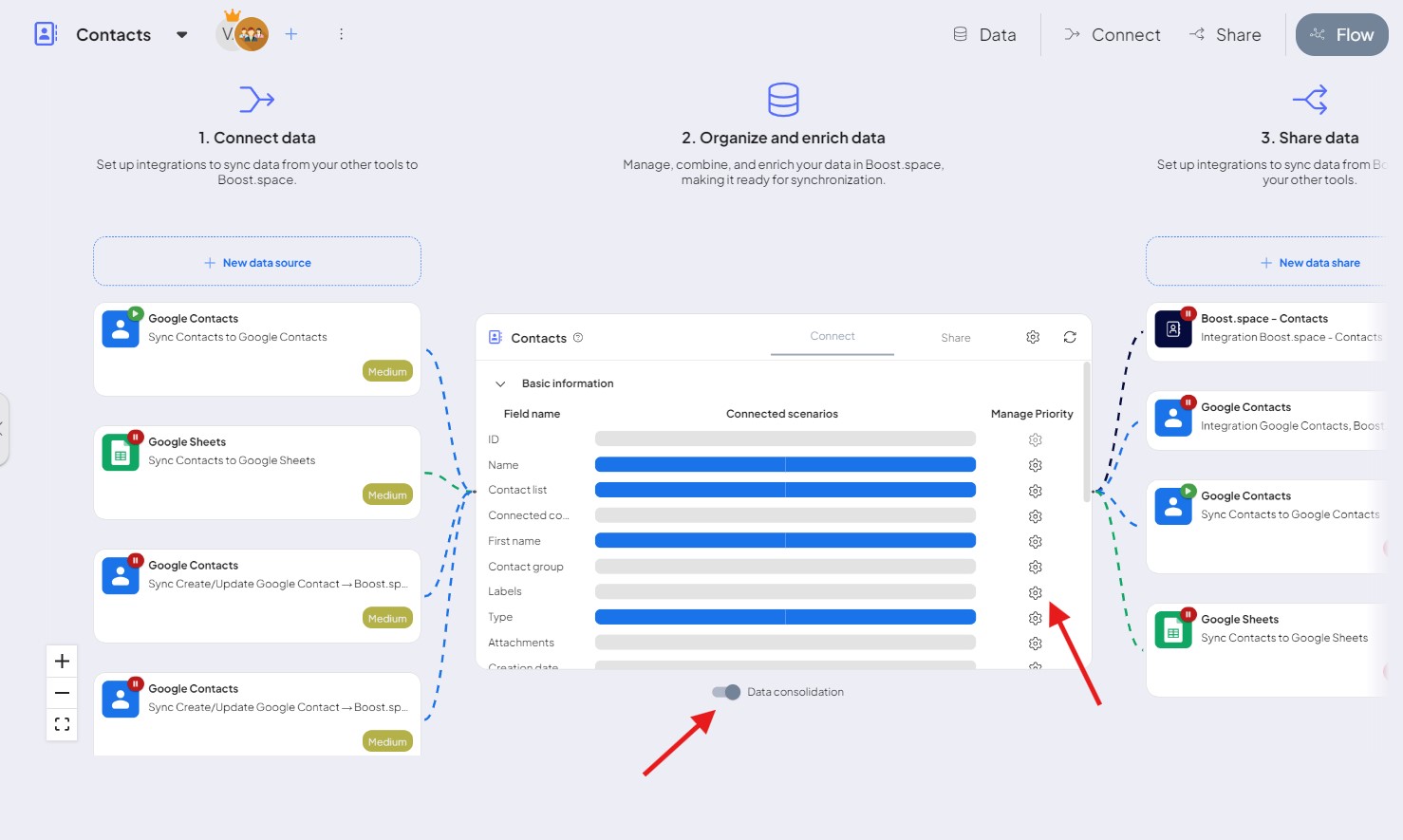

Upon clicking the “Flow” option, a table will appear in the middle of the interface. Click on the button to open the function of Data consolidation. This table allows you to select the data you wish to consolidate by field name and provides options for further customization.

- Select the desired data to consolidate by a field name in the table.

- Click on the gear wheel icon to access the token prioritization interface.

In the token prioritization interface, you will find a table displaying the priorities assigned to each token. The available priorities are: lowest, lower, low, medium, high, higher, and highest. You can edit these priorities as per your requirements.

Other Adjustable Settings:

The token prioritization interface also offers additional adjustable settings to enhance your data consolidation process. These settings include:

- Navigating to specific scenarios: By double-clicking on a scenario, you will be directed to that particular scenario in the Integrator, facilitating a focused review and adjustment process.

- Data import and export toggling: You can switch between data import and export views within the table. However, it’s important to note that token priority is only relevant for data import scenarios.

Conclusion:

Data consolidation is a vital process for maintaining accurate and up-to-date datasets. By following the steps outlined in this article and leveraging token prioritization, you can efficiently consolidate your data and ensure seamless updates. Remember that tokens do not affect manual data overwriting, and data can always be overwritten manually if needed.

For further assistance or detailed guidance on specific scenarios, please refer to the appropriate sections of the Integrator documentation or contact our support team at [email protected].