- Google Cloud Speech

- Connect Google Cloud Speech to Boost.space Integrator

- Create and configure a Google Cloud Platform project for Google Cloud Speech

- Create a Google Cloud Platform project for Google Cloud Speech

- Enable APIs for Google Cloud Platform

- Configure your OAuth consent screen for Google Cloud Platform

- Create your Google Cloud Speech client credentials

- Establish the connection with Google Cloud Speech in Boost.space Integrator

- Build Google Cloud Speech Scenarios

- Connect Google Cloud Speech to Boost.space Integrator

With Google Cloud Speech modules in Boost.space Integrator, you can handle speech recognition in your Google Cloud Speech account.

To use the Google Cloud Speech modules, you must have a Google account, and a Google Cloud Speech project created in your Google Cloud Platfrom. You can create an account at accounts.google.com.

Refer to the Google Cloud Speech API documentation for a list of available endpoints.

![[Note]](https://docs.boost.space/wp-content/themes/bsdocs/docs-parser/HTML/css/image/note.png) |

Note |

|---|---|

| Boost.space Integrator‘s use and transfer of information received from Google APIs to any other app will adhere to Google API Services User Data Policy. |

To establish the connection, you must:

Before you establish the connection in Boost.space Integrator, you must create and configure a project in the Google Cloud Platform to obtain your client credentials.

To create a Google Cloud Platform project:

- Log in to the Google Cloud Platform using your Google credentials.

- On the welcome page, click Create or select a project > New project. If you already have a project, proceed to the step 5.

- Enter a Project name and select the Location for your project.

- Click Create.

- In the top menu, check if your new project is selected in the Select a project dropdown. If not, select the project you just created.

![[Note]](https://docs.boost.space/wp-content/themes/bsdocs/docs-parser/HTML/css/image/note.png) |

Note |

|---|---|

To create a new project or work in the existing one, you need to have the serviceusage.services.enable permission. If you don’t have this permission, ask the Google Cloud Platform Project Owner or Project IAM Admin to grant it to you. |

To enable the required APIs:

- Open the left navigation menu and go to APIs & Services > Library.

- Search for the following API: Cloud Resource Manager API.

- Click Cloud Resource Manager API, then click Enable. If you see the Manage button instead of the Enable button, you can proceed to the next step: the API is already enabled.

To configure your OAuth consent screen:

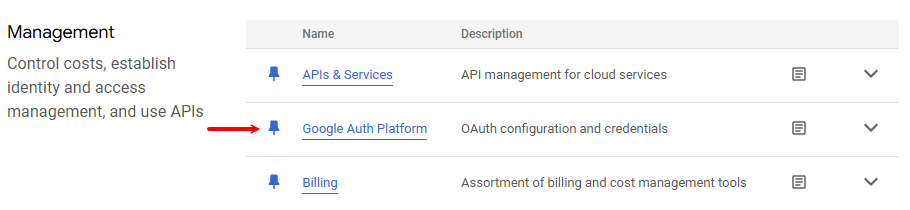

- In the left sidebar, click Google Auth Platform.

- Click Get Started.

- In the Overview section, under App information, enter Make as the app name and provide your Gmail address. Click Next.

- Under Audience, select External. Click Next.For more information regarding user types, refer to Google’s Exceptions to verification requirements documentation.

- Under Contact Information, enter your Gmail address. Click Next.

- Under Finish, agree to the Google User Data Policy.

- Click Continue > Create.

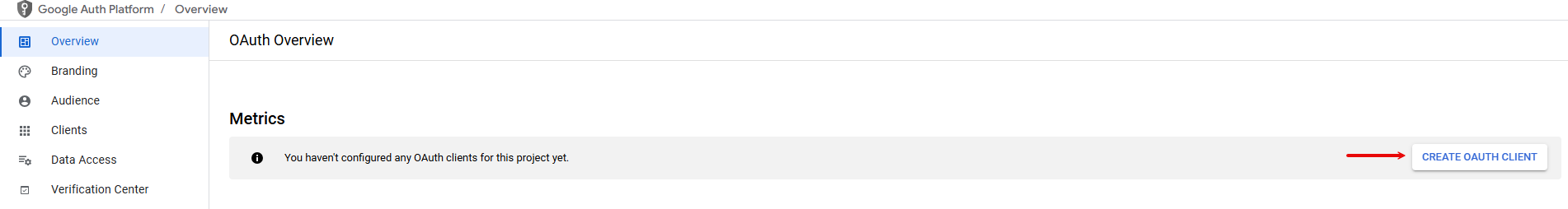

- Click Create OAuth Client.

- In the Branding section, under Authorized domains, add

make.comandboost.space. Click Save. - Optional: In the Audience section, add your Gmail address on the Test users page, then click Save and continue if you want the project to remain in the Testing publishing status. Read the note below to learn more about the publishing status.

- In the Data Access section, click Add or remove scopes, add the following scope, and click Update:

https://www.googleapis.com/auth/cloud-platform - Click Save.

![[Note]](https://docs.boost.space/wp-content/themes/bsdocs/docs-parser/HTML/css/image/note.png) |

Note |

|---|---|

| Publishing Status

Testing: If you keep your project in the Testing status, you will be required to reauthorize your connection in Boost.space Integrator every week. To avoid weekly reauthorization, update the project status to In production. In production: If you update your project to the In production status, you will not be required to reauthorize the connection weekly. To update your project’s status, go to the Google Auth Platform, the Audience section, and click Publish app. If you see the notice Needs verification, you can choose whether to go through the Google verification process for the app or to connect to your unverified app. Currently connecting to unverified apps works in Boost.space Integrator, but we cannot guarantee the Google will allow connections to unverified apps for an indefinite period. For more information regarding the publishing status, refer to the Publishing status section of Google’s Setting up your OAuth consent screen help. |

To create your client credentials:

- In Google Auth Platform, click Clients.

- Click + Create Client.

- In the Application type dropdown, select Web application.

- Update the Name of your OAuth client. This will help you identify it in the platform.

- In the Authorized redirect URIs section, click + Add URI and enter the following redirect URI:

https://integrator.boost.space/oauth/cb/google-cloud-speech. - Click Create.

- Click the OAuth 2.0 Client you created, copy your Client ID and Client secret values, and store them in a safe place.

You will use these values in the Client ID and Client Secret fields in Boost.space Integrator.

To establish the connection in Boost.space Integrator:

- Log in to your Boost.space Integrator account, add a Google Cloud Speech module to your scenario, and click Create a connection.

- Optional: In the Connection name field, enter a name for the connection.

- In the Client ID and Client Secret fields, enter the values you copied in the Create your Google Cloud Speech client credentials section above.

- Click Sign in with Google.

- If prompted, authenticate your account and confirm access.

You have successfully established the connection. You can now edit your scenario and add more Google Cloud Speech modules. If your connection requires reauthorization at any point, follow the connection renewal steps here.

NOTE:

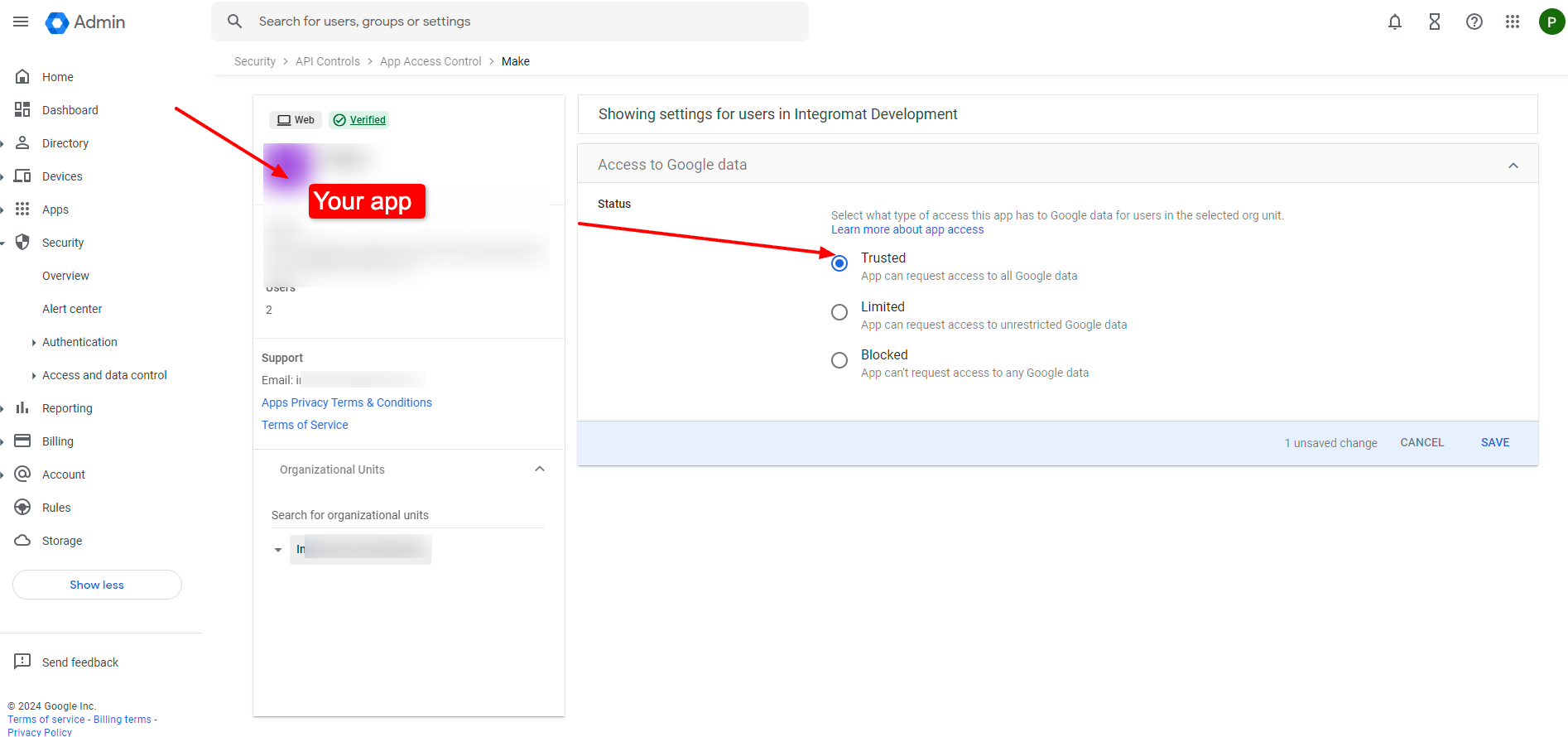

In some cases, for modules that use sensitive or restricted scopes, you may encounter issues with the privacy policy not being properly configured. This is affecting users who have an active workspace (admin console). You can change those permissions as instructed below.